When it comes to getting your website visible in Google Search, it’s important to understand how Google’s web spiders work.

Google sends these web spiders, also known as bots or crawlers, to discover and crawl the pages on your website and store them in its massive index. However, if your website is not properly crawled and indexed, its pages may not appear in search results.
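To make crawling more concrete, here is a minimal sketch of how a crawler discovers pages: it starts from one URL, follows the links it finds, and stops when it runs out of new links or reaches a page limit. This is a simplified illustration, not how Googlebot actually works; `crawl`, `get_links`, and `max_pages` are hypothetical names, and `get_links` stands in for fetching a page and extracting its links.

```python
from collections import deque
from typing import Callable, Iterable, List


def crawl(start_url: str,
          get_links: Callable[[str], Iterable[str]],
          max_pages: int = 100) -> List[str]:
    """Breadth-first crawl starting at start_url.

    get_links(url) is a hypothetical callback returning the links on a page.
    Pages are visited in discovery order until no new links remain or
    max_pages pages have been crawled.
    """
    seen = {start_url}          # URLs already discovered (queued or crawled)
    queue = deque([start_url])  # URLs waiting to be crawled
    crawled = []
    while queue and len(crawled) < max_pages:
        url = queue.popleft()
        crawled.append(url)
        for link in get_links(url):
            if link not in seen:  # queue each URL only once
                seen.add(link)
                queue.append(link)
    return crawled


# A tiny in-memory "site": each page maps to the links it contains.
site = {
    "/": ["/about", "/blog"],
    "/about": [],
    "/blog": ["/blog/post-1"],
    "/blog/post-1": [],
    "/orphan": [],  # no page links here
}

print(crawl("/", lambda url: site.get(url, [])))
# ['/', '/about', '/blog', '/blog/post-1']
print(crawl("/", lambda url: site.get(url, []), max_pages=2))
# ['/', '/about']
```

`/orphan` is never crawled because nothing links to it, and the second call shows how a page limit leaves part of a site unvisited.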

Unfortunately, many web developers and site owners fail to optimize and structure their websites in a way that makes them easy for Google’s bots to crawl. This can lead to crawlability problems, which can keep your website’s pages out of search results.

If you’re experiencing crawlability problems, there are several steps you can take to fix them. In this article, we cover the most common Google crawlability problems and share tips on how to optimize your website to improve its crawlability.

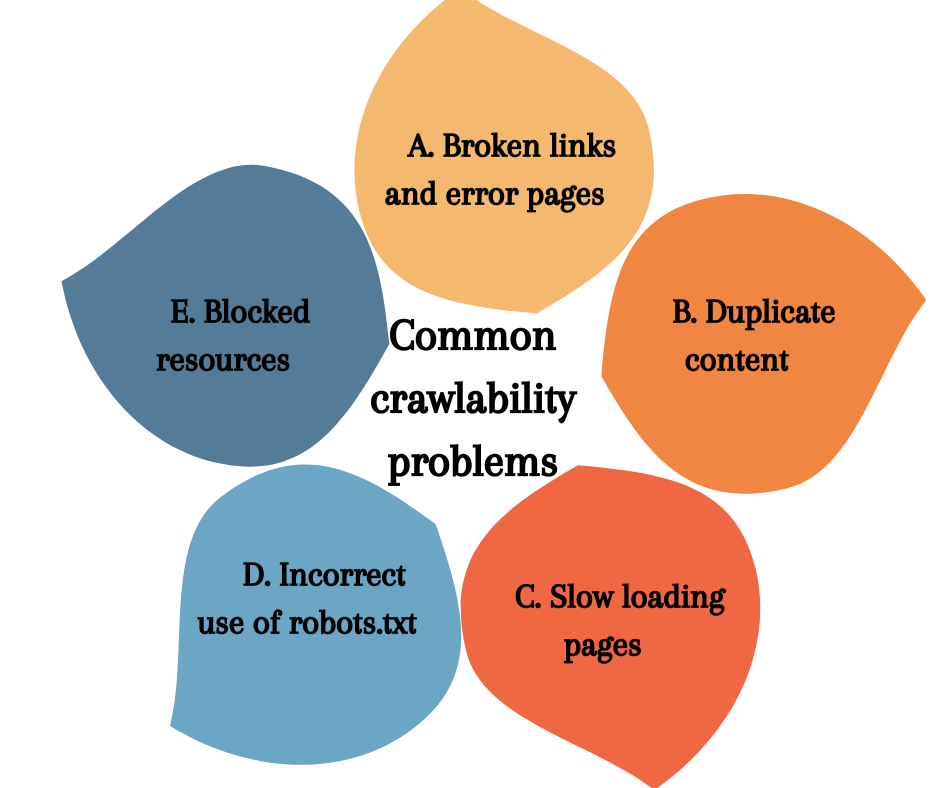

Common crawlability problems

There can be hundreds of small or big issues that keep web spiders from crawling your website. Here are five of the most common crawlability problems, all of which can be prevented for better indexing.

1. Broken links and error pages

Broken links are an issue that can frustrate users and negatively impact a website’s search engine optimization (SEO). When a hyperlink leads to a page that no longer exists, users are met with an error message and cannot access the intended content. This can happen for a variety of reasons, such as the page being deleted, expired, or moved to a different URL.

From an SEO perspective, broken links can hurt a website’s ranking. When crawlers follow a broken link, they reach an error page instead of content, so the intended page cannot be crawled and indexed through that link, which reduces its visibility and ultimately its rankings. Additionally, a large number of broken links may signal to search engines that a website is poorly maintained, which can further hurt its SEO performance.
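A minimal sketch of a broken-link check for a single page, using only Python’s standard library: it extracts the `href` of every `<a>` tag, resolves it against the page URL, and reports links that return an HTTP status of 400 or higher or cannot be reached at all. The function names are hypothetical, and `http_status` sends a `HEAD` request; some servers answer `HEAD` differently from `GET`, so treat the results as a starting point for manual review.

```python
from html.parser import HTMLParser
from urllib.error import HTTPError
from urllib.parse import urljoin
from urllib.request import Request, urlopen


class LinkExtractor(HTMLParser):
    """Collects absolute URLs from <a href="..."> tags."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        # Skip in-page anchors and non-HTTP links.
        if href and not href.startswith(("#", "mailto:", "tel:", "javascript:")):
            self.links.append(urljoin(self.base_url, href))


def http_status(url, timeout=10):
    """Return the final HTTP status for url (redirects are followed), or None."""
    try:
        with urlopen(Request(url, method="HEAD"), timeout=timeout) as resp:
            return resp.status
    except HTTPError as err:  # 4xx/5xx responses raise HTTPError
        return err.code
    except OSError:           # DNS failures, refused connections, timeouts
        return None


def find_broken_links(html, page_url, get_status=http_status):
    """Return (url, status) pairs for links that fail or return >= 400."""
    parser = LinkExtractor(page_url)
    parser.feed(html)
    broken = []
    for link in parser.links:
        status = get_status(link)
        if status is None or status >= 400:
            broken.append((link, status))
    return broken


# Offline usage: a dict lookup stands in for real HTTP requests.
html = '<a href="/ok">A</a> <a href="/gone">B</a> <a href="#top">C</a>'
statuses = {"https://example.com/ok": 200, "https://example.com/gone": 404}
print(find_broken_links(html, "https://example.com/page", get_status=statuses.get))
# [('https://example.com/gone', 404)]
```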

2. Duplicate content

When designing a website, it’s important to consider how duplicate or very similar content affects your search engine optimization (SEO). Having multiple pages on your site with similar content can confuse search engine crawlers, which may have trouble determining which version to index.

As a result, a copy may be indexed instead of your main page, leading to a lower search engine results page (SERP) ranking and reduced visibility for the page you care about. Excessive duplication also spends crawl resources on redundant URLs and may lead search engines to view your site as low-quality, reducing its potential to rank highly in search results.
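One common way to spot near-duplicate pages is to compare overlapping word sequences (shingles) and score each pair of pages by Jaccard similarity: the number of shared shingles divided by the number of distinct shingles across both pages. The sketch below assumes you already have each page’s main text; the 5-word shingle size and 0.8 threshold are illustrative assumptions, not values any search engine publishes.

```python
import re
from itertools import combinations


def shingles(text, k=5):
    """Return the set of k-word sequences in text (lowercased)."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a, b):
    """Share of shingles two sets have in common (1.0 = identical)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def near_duplicates(pages, threshold=0.8, k=5):
    """Return (url_a, url_b, score) for page pairs at or above threshold."""
    sets = {url: shingles(text, k) for url, text in pages.items()}
    pairs = []
    for u, v in combinations(sorted(sets), 2):
        score = jaccard(sets[u], sets[v])
        if score >= threshold:
            pairs.append((u, v, round(score, 2)))
    return pairs


base = ("Red running shoes for men. Lightweight, breathable and durable, "
        "with a cushioned sole for long runs on road or track.")
pages = {
    "/shoes": base,
    "/shoes?sort=price": base + " Sorted by price.",
    "/about": "We are a small company founded in 2010 that sells outdoor gear.",
}
print(near_duplicates(pages))
# [('/shoes', '/shoes?sort=price', 0.84)]
```

Comparing every pair is fine for a few hundred pages; larger sites usually need a hashing scheme such as MinHash to avoid the quadratic number of comparisons.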

3. Slow-loading pages

Search engine crawlers do not spend unlimited time on any one website. Google describes this limit as a crawl budget: the number of URLs Googlebot can and wants to crawl on a site.

If your website has slow-loading pages, crawlers may miss important ones. Google adjusts its crawl rate to how your server responds: when pages load quickly, Googlebot can crawl more of them, and when the server slows down or returns errors, it crawls less to avoid overloading the site.

As a result, slow-loading pages act as barriers to your website getting completely crawled and indexed. This can negatively impact your website’s search engine visibility and performance.
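A rough way to find pages that may be slowing crawlers down is to time how long each one takes to download. This sketch measures wall-clock download time with the standard library; the function names and the 1-second threshold are arbitrary assumptions, and a measurement from your own machine only approximates what Googlebot experiences (Search Console’s Crawl stats report shows the response times Google actually sees).

```python
import time
from urllib.request import urlopen


def fetch(url, timeout=10):
    """Download a page completely, roughly as a crawler would."""
    with urlopen(url, timeout=timeout) as resp:
        resp.read()


def find_slow_pages(urls, threshold_s=1.0, fetcher=fetch):
    """Return (url, seconds) for pages slower than threshold_s, slowest first."""
    slow = []
    for url in urls:
        start = time.perf_counter()
        fetcher(url)
        elapsed = time.perf_counter() - start
        if elapsed > threshold_s:
            slow.append((url, round(elapsed, 2)))
    return sorted(slow, key=lambda item: item[1], reverse=True)


# Offline usage: a fake fetcher that simulates one slow page.
def fake_fetch(url):
    time.sleep(0.3 if "slow" in url else 0.0)


urls = ["https://example.com/", "https://example.com/slow"]
print(find_slow_pages(urls, threshold_s=0.1, fetcher=fake_fetch))
```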

4. Incorrect use of robots.txt

The robots.txt file is a plain-text file at the root of your site that tells search engine crawlers which parts of your website they may crawl, which makes it an important part of your Search Engine Optimization (SEO) strategy.

It works as a roadmap for crawlers, telling them which pages they can access and which they should avoid. However, it’s essential to configure the robots.txt file correctly; otherwise, it may mislead crawlers and cause several issues.

For instance, a misconfigured robots.txt file can block important pages you want indexed while leaving pages you want hidden open to crawlers. Keep in mind that robots.txt controls crawling, not indexing: a blocked URL can still appear in search results if other pages link to it, so pages that must stay out of the index need a noindex directive instead. When crawlers cannot reach the pages that matter, those pages cannot be indexed properly, which leads to lower search engine rankings.
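Before deploying a robots.txt change, you can check which important URLs it would block. This sketch uses Python’s built-in `urllib.robotparser`; the rules, URLs, and the `blocked_urls` helper are hypothetical. This parser is simpler than Google’s (it applies the first matching rule rather than the most specific one and does not understand `*` or `$` wildcards in paths), so Search Console’s robots.txt report remains the authoritative check for Googlebot.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: the intent was to hide only /admin/, but the
# second rule also blocks every URL whose path starts with /products.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /products
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that robots_txt does not allow user_agent to crawl."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return [url for url in urls if not rules.can_fetch(user_agent, url)]


important = [
    "https://example.com/",
    "https://example.com/products/running-shoes",
    "https://example.com/blog/spring-sale",
    "https://example.com/admin/login",
]
print(blocked_urls(ROBOTS_TXT, important))
# ['https://example.com/products/running-shoes', 'https://example.com/admin/login']
```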

5. Blocked resources

Crawlers can also be blocked from parts of your site in other ways. For years, Google’s webmaster tools (now Google Search Console) let site owners tell Google how to handle certain URL parameters, including not crawling the matching pages; Google retired that URL Parameters tool in 2022. Resources such as CSS, JavaScript, and images can also be blocked, for example by robots.txt rules. Any of these blocks can lead to an incomplete crawl of your website, which in turn can result in indexing issues.

For instance, if a page that should be indexed is not crawled by search engine bots, it may not show up in search results. Therefore, be cautious when blocking URL parameters or resources, and make sure you are not inadvertently blocking important pages or files that should be crawled and indexed.
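Resources such as stylesheets, scripts, and images are easy to block by accident with a broad robots.txt rule. This sketch, again using the standard library, lists the resources a page references and reports which same-host ones the given robots.txt blocks; the HTML, rules, and `blocked_resources` helper are hypothetical, and the same parser limitations as `urllib.robotparser` in general apply (no wildcard support, first match wins).

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit
from urllib.robotparser import RobotFileParser


class ResourceExtractor(HTMLParser):
    """Collects absolute URLs of scripts, stylesheets, and images."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.resources = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower().split()
        if tag in ("script", "img") and attrs.get("src"):
            self.resources.append(urljoin(self.base_url, attrs["src"]))
        elif tag == "link" and "stylesheet" in rel and attrs.get("href"):
            self.resources.append(urljoin(self.base_url, attrs["href"]))


def blocked_resources(html, page_url, robots_txt, user_agent="Googlebot"):
    """Return same-host resources on the page that robots_txt blocks."""
    extractor = ResourceExtractor(page_url)
    extractor.feed(html)
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    host = urlsplit(page_url).netloc
    return [
        url for url in extractor.resources
        # Resources on other hosts are governed by those hosts' robots.txt.
        if urlsplit(url).netloc == host and not rules.can_fetch(user_agent, url)
    ]


page = """
<link rel="stylesheet" href="/assets/site.css">
<script src="/assets/app.js"></script>
<img src="/images/logo.png" alt="Logo">
<script src="https://cdn.example.net/lib.js"></script>
"""
robots = "User-agent: *\nDisallow: /assets/\n"
print(blocked_resources(page, "https://example.com/", robots))
# ['https://example.com/assets/site.css', 'https://example.com/assets/app.js']
```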

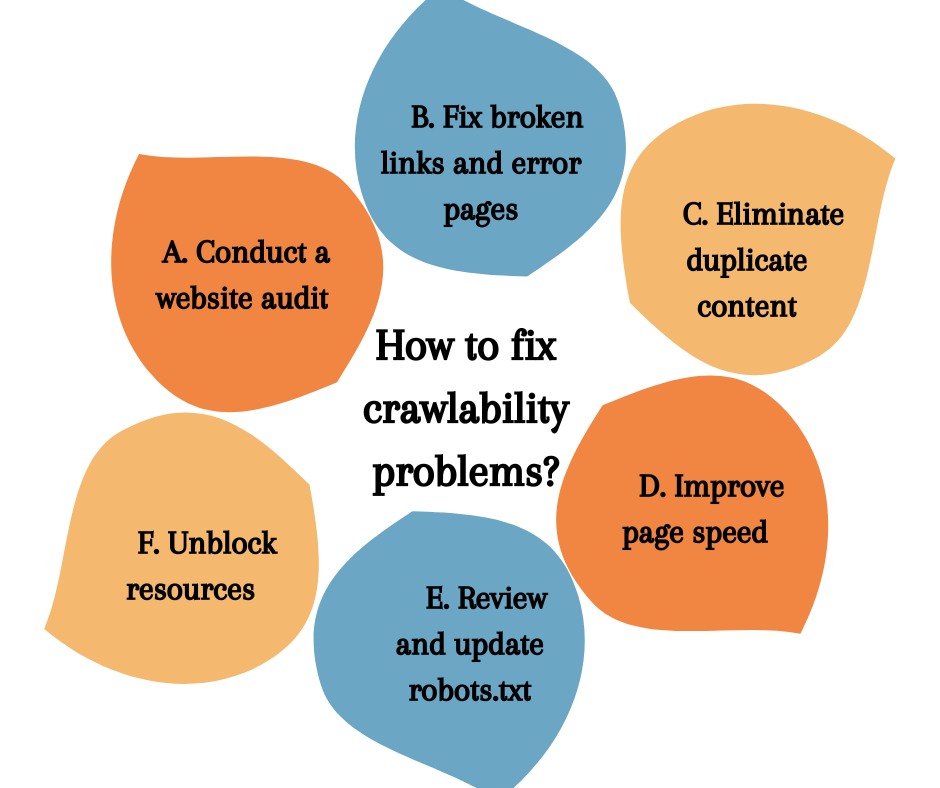

How to fix crawlability problems?

Website crawlers are automated bots that discover pages by following links according to predefined crawling rules. To ensure that they can effectively crawl and index your website, take certain measures and follow best practices. Let’s explore some of the ways you can address crawlability issues and optimize your website for search engines.

1. Conduct a website audit

Conducting a thorough website audit should be your first step. A site crawler, or Google Search Console’s Page indexing and Crawl stats reports, can help you identify technical issues that may be hindering the crawling and indexing process. Once you have identified the issues, you can work on fixing them.
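A basic audit can start from your XML sitemap: list every URL you expect search engines to crawl, then check what each one returns. In this sketch, `get_status` is a hypothetical callback that returns a URL’s HTTP status without following redirects (for example, from a crawling tool you already use); the function names and the three buckets are illustrative assumptions.

```python
import xml.etree.ElementTree as ET

# Namespace used by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml):
    """Return the <loc> URLs listed in a sitemap's <url> entries."""
    root = ET.fromstring(sitemap_xml)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)]


def audit_sitemap(sitemap_xml, get_status):
    """Sort URLs into ok (2xx), redirect (3xx), error (4xx/5xx or unreachable)."""
    report = {"ok": [], "redirect": [], "error": []}
    for url in sitemap_urls(sitemap_xml):
        status = get_status(url)
        if status is None or status >= 400:
            report["error"].append(url)
        elif status >= 300:
            report["redirect"].append(url)
        else:
            report["ok"].append(url)
    return report


sitemap = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/old-page</loc></url>
  <url><loc>https://example.com/deleted-page</loc></url>
</urlset>"""
statuses = {  # stand-in for real HTTP checks
    "https://example.com/": 200,
    "https://example.com/old-page": 301,
    "https://example.com/deleted-page": 404,
}
print(audit_sitemap(sitemap, statuses.get))
```

A sitemap should generally list only the final, indexable version of each URL, so anything in the redirect or error buckets is worth fixing or removing from the sitemap.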

2. Fix broken links and error pages

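Broken links and error pages can be a major hindrance for search engine crawlers. Fix them as soon as possible, either by updating each link to point to the correct URL or by redirecting a removed page to a relevant replacement with a permanent (301) redirect, so that your website is fully crawled and indexed.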
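When a page has moved, a permanent (301) redirect from the old URL to the new one fixes both the broken link and the crawl dead end. If pages have moved more than once, redirects can form chains (A to B to C) that cost crawlers extra requests. The hypothetical helper below takes a mapping of old to new URLs, points every old URL straight at its final destination, and rejects loops; turning the result into server rules depends on your web server and is not shown.

```python
def flatten_redirects(redirects):
    """Map each redirected URL directly to its final target.

    redirects: dict of old URL -> new URL. Raises ValueError on a loop.
    """
    flat = {}
    for start, target in redirects.items():
        path = [start]
        while target in redirects:  # the target itself redirects somewhere
            if target in path:
                raise ValueError("redirect loop: " + " -> ".join(path + [target]))
            path.append(target)
            target = redirects[target]
        flat[start] = target
    return flat


moves = {
    "/old-shoes": "/shoes",
    "/shoes": "/products/shoes",  # the page moved a second time
    "/summer-sale": "/sale",
}
print(flatten_redirects(moves))
# {'/old-shoes': '/products/shoes', '/shoes': '/products/shoes', '/summer-sale': '/sale'}
```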

3. Eliminate duplicate content

Duplicate content can also be problematic, as it can confuse search engines and lead to lower rankings. By consolidating duplicate pages, for example with a 301 redirect or a `rel="canonical"` link pointing to the preferred URL, you can ensure that your website is being effectively crawled and indexed.
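Many duplicates come from the same page being reachable at several URLs, for example with tracking parameters, reordered query strings, or different capitalization in the host name. A minimal sketch of URL normalization is below; the `TRACKING_PARAMS` list is an assumption to adapt to your site, and sorting query parameters is only safe if your application ignores their order. The normalized URL is the one you would redirect to or reference in a page’s `<link rel="canonical" href="...">` tag.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed list of parameters that never change page content on this site.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign",
                   "utm_term", "utm_content", "sessionid"}


def canonical_url(url):
    """Normalize a URL so that duplicate variants map to one address."""
    parts = urlsplit(url)
    query = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in TRACKING_PARAMS
    )
    return urlunsplit((
        parts.scheme.lower(),
        parts.netloc.lower(),  # host names are case-insensitive
        parts.path or "/",
        urlencode(query),
        "",                    # fragments are never sent to the server
    ))


variants = [
    "https://Example.com/shoes?size=9&color=red",
    "https://example.com/shoes?color=red&size=9&utm_source=newsletter",
    "https://example.com/shoes?color=red&size=9#reviews",
]
print({canonical_url(v) for v in variants})
# {'https://example.com/shoes?color=red&size=9'}
```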

4. Improve page speed

Another important factor to consider is page speed. Slow loading times can negatively impact your website’s crawlability and indexing. By optimizing your website’s code, compressing images, and using a content delivery network (CDN), you can improve your page speed and enhance your website’s crawlability.
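Text compression is another quick win for code files. The sketch below uses Python’s built-in `gzip` module to estimate how much smaller an HTML, CSS, or JavaScript response would be if your server compressed it; `gzip_savings` is a hypothetical helper, the sample markup is made up, and real savings depend on your content and on whether your server or CDN already compresses responses (look for a `Content-Encoding` response header).

```python
import gzip


def gzip_savings(body: bytes, level: int = 6):
    """Return (original bytes, gzipped bytes, fraction saved) for a response body."""
    if not body:
        return 0, 0, 0.0
    compressed = gzip.compress(body, compresslevel=level)
    return len(body), len(compressed), 1 - len(compressed) / len(body)


# Made-up, highly repetitive HTML, similar to a long product listing.
html = b"<li class='product'><a href='/shoes'>Red running shoes</a></li>\n" * 500
original, compressed, saved = gzip_savings(html)
print(f"{original} bytes -> {compressed} bytes ({saved:.0%} smaller)")
```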

5. Review and update robots.txt

Your website’s robots.txt file is another important element to consider. This file tells search engine crawlers which pages to crawl and which to avoid. Review it whenever your site structure changes, and make sure it does not block pages or resources you want crawled, so that your website is crawled and indexed effectively.
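When reviewing robots.txt, a few mistakes are worth checking for automatically. The hypothetical linter below flags three of them: a `Disallow: /` rule that blocks an entire site for a user agent, rules that look like they block CSS or JavaScript directories, and a missing `Sitemap:` line. The directory-name patterns are assumptions about typical site layouts, so treat the output as prompts for review rather than errors.

```python
import re

# Assumed patterns for paths that usually hold rendering resources.
RESOURCE_PATH = re.compile(r"\.(css|js)\$?$|/(css|js|assets|static)/", re.IGNORECASE)


def lint_robots(robots_txt):
    """Return human-readable warnings for common robots.txt mistakes."""
    warnings = []
    agent = None
    has_sitemap = False
    for raw_line in robots_txt.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            agent = value
        elif field == "disallow" and value == "/":
            warnings.append(f"user-agent {agent}: 'Disallow: /' blocks the entire site")
        elif field == "disallow" and RESOURCE_PATH.search(value):
            warnings.append(
                f"user-agent {agent}: 'Disallow: {value}' may block CSS/JS needed for rendering")
        elif field == "sitemap":
            has_sitemap = True
    if not has_sitemap:
        warnings.append("no 'Sitemap:' line found")
    return warnings


robots = """\
User-agent: *
Disallow: /admin/
Disallow: /assets/

User-agent: BadBot
Disallow: /
"""
for warning in lint_robots(robots):
    print(warning)
# user-agent *: 'Disallow: /assets/' may block CSS/JS needed for rendering
# user-agent BadBot: 'Disallow: /' blocks the entire site
# no 'Sitemap:' line found
```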

6. Unblock resources

Finally, be sure to unblock any resources that search engine crawlers need to access and render your website, such as CSS and JavaScript files, images, and videos. When these resources are blocked, Google may not be able to render your pages the way users see them. By unblocking them, you can improve your website’s crawlability and indexing, and ultimately support better search engine rankings.