Technical SEO is about improving the technical elements of a page or website. It mainly deals with how a website functions, including its security and page speed. Because SEO requirements change constantly and can be confusing, technical SEO is prone to mistakes.

As a result, many issues come up when doing technical SEO. This article covers the most common ones, but first, let’s look at what technical SEO is.


What Is Technical SEO?

Technical SEO covers everything you do to optimize how your website functions, for both search engines and user experience. It has a strong influence on website performance.

Typical technical SEO tasks include improving site speed, creating and submitting sitemaps, and making the site SEO-friendly. Technical SEO makes your site easy to navigate and keeps it working smoothly.

Good technical SEO attracts search engine crawlers as well as organic traffic, because it helps your site get indexed and ranked properly. Let us now move on to the most common technical SEO mistakes.

Common Technical SEO Issues

Technical SEO is a demanding task that requires focus and regular site audits. Audits can reveal many issues; here are the most common ones, along with solutions to fix them:

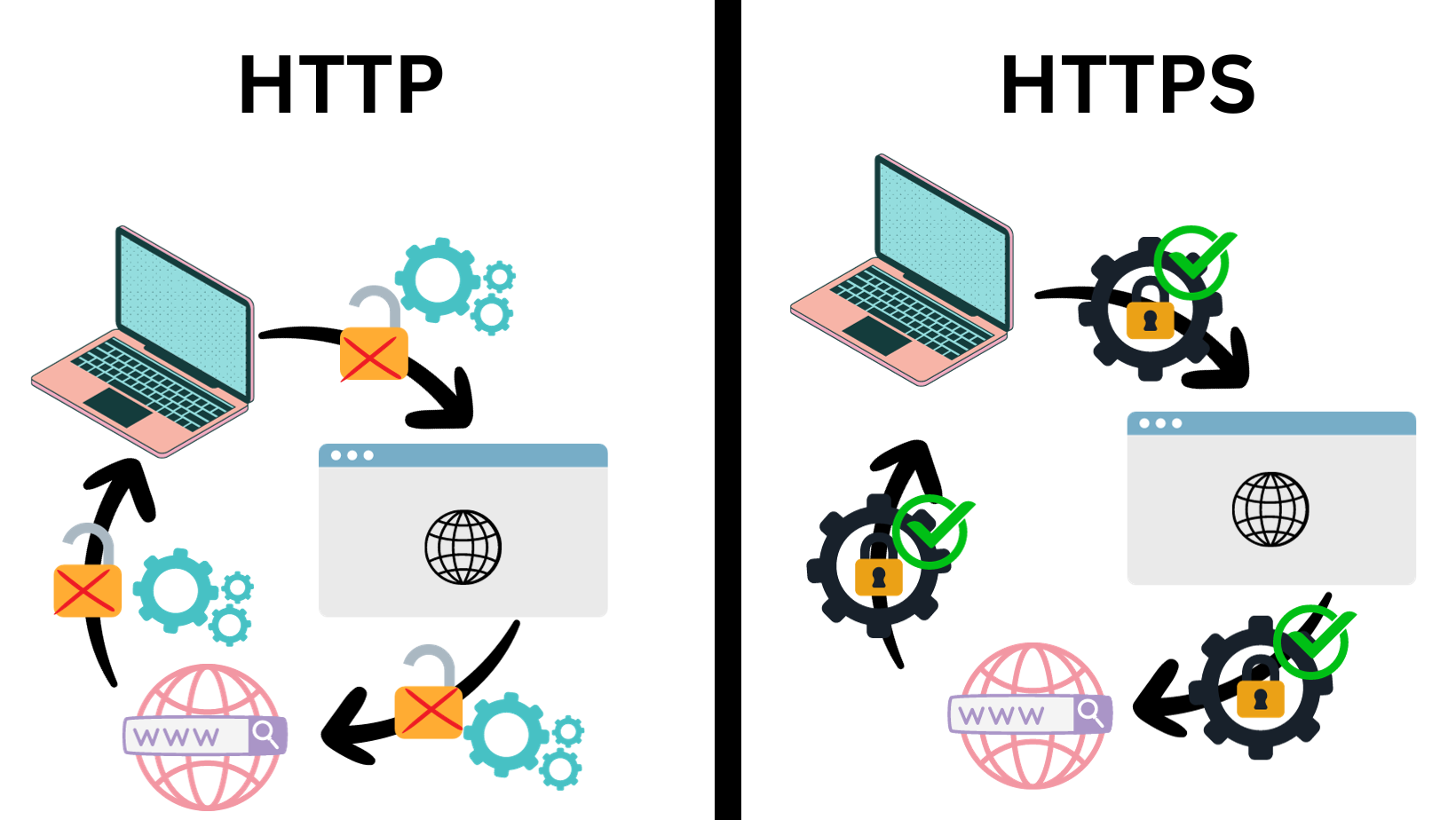

1. HTTPS security

Many sites still do not use HTTPS. A site served over plain HTTP is neither secure nor encrypted, and Google has confirmed that HTTPS is a ranking factor. HTTP-related problems are among the most common technical issues on websites: a poorly handled HTTP version can lead to 4xx errors, pages that are not crawled, broken internal links, and similar problems.

HTTPS encrypts traffic, protects users’ data, and improves user experience. To move your site to HTTPS, you first need an SSL/TLS certificate.
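After a move to HTTPS, leftover absolute `http://` links to your own pages are one source of the broken internal links and extra redirects described above. The following is a minimal sketch, using only Python’s standard library, that scans a page’s HTML for links and resources on your own host that still use `http://` and suggests the `https://` equivalent. The function name `find_insecure_urls` and the host `www.example.com` are hypothetical; relative links are skipped because they inherit the page’s scheme.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class LinkCollector(HTMLParser):
    """Collects every href and src attribute value found in an HTML document."""

    def __init__(self):
        super().__init__()
        self.urls = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in ("href", "src") and value:
                self.urls.append(value)


def find_insecure_urls(html, site_host):
    """Return (old_url, https_url) pairs for http:// URLs that point at site_host."""
    collector = LinkCollector()
    collector.feed(html)
    results = []
    for url in collector.urls:
        parts = urlparse(url)
        # Only absolute http:// URLs on our own host are flagged; relative
        # URLs and links to other sites are left alone.
        if parts.scheme == "http" and parts.hostname == site_host:
            results.append((url, parts._replace(scheme="https").geturl()))
    return results
```

Feeding it the HTML of each page found during a site audit gives a list of links to update, so internal links point straight at the HTTPS version instead of relying on redirects.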

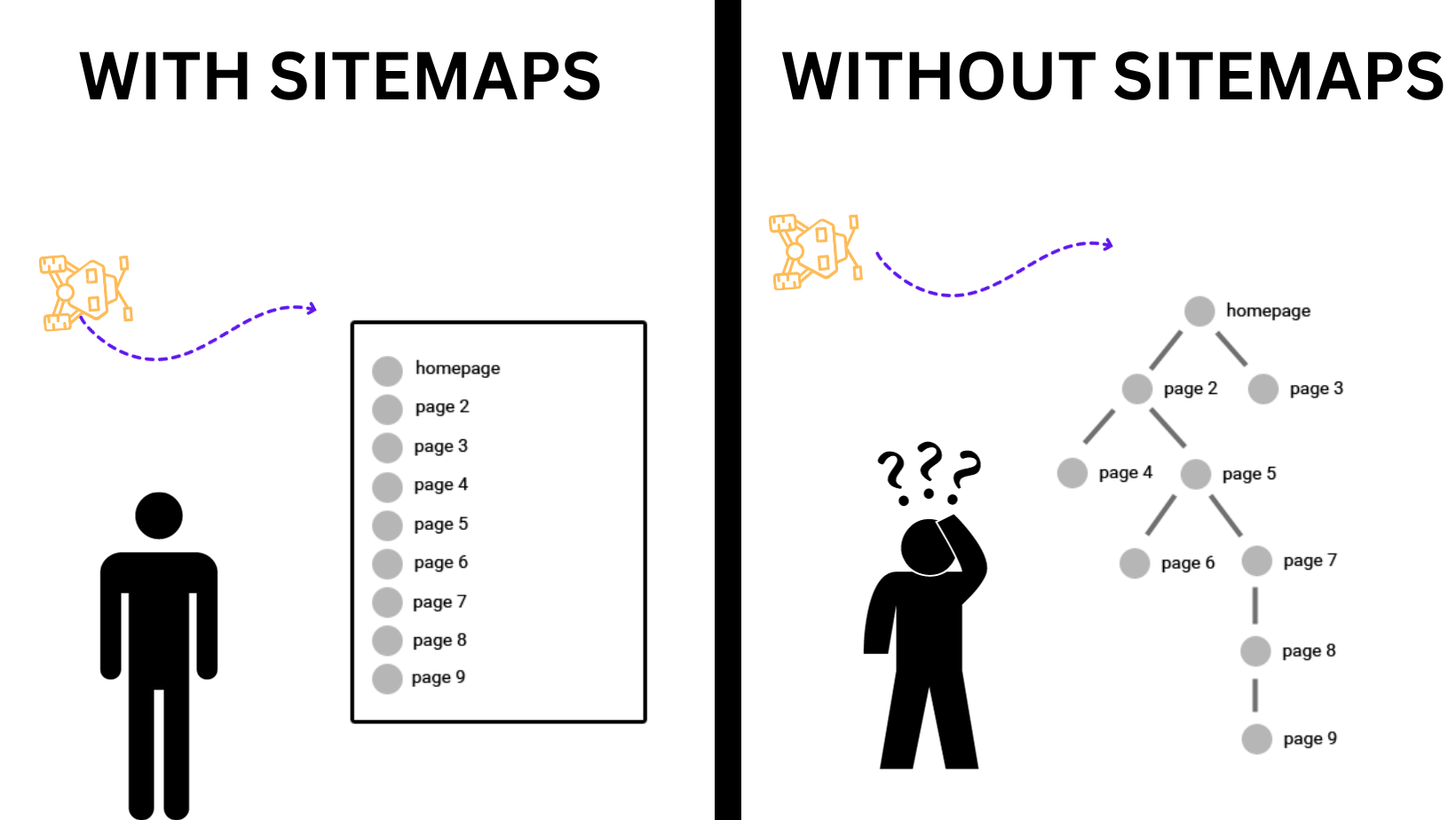

2. XML sitemaps

XML sitemaps help search engines find your pages and understand what they are about. They are an essential part of technical SEO, yet developers often neglect to submit sitemaps to search engines.

A sitemap lists your important pages and can signal when pages are updated or removed. Submitting one helps ensure that search engines know about all of your pages and have the latest version of your site, so it can be indexed properly.

A common problem is a sitemap that lists ineligible URLs, such as pages that return 4xx errors, URLs that are no longer valid, pages marked noindex, or pages that redirect elsewhere.

Another problem is a sitemap that crawlers cannot access, for example because the robots.txt file blocks it. Search engines may stop reading your sitemap if they are unable to fetch it after several attempts.

The next problem is the size of the sitemap. A single sitemap file is limited to 50 MB (uncompressed) and 50,000 URLs, and it must not exceed either limit. At the other extreme, submitting sitemaps with very little content is also a problem.
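As a minimal sketch, assuming the sitemap has already been downloaded as raw bytes, the check below compares one sitemap file against the 50,000-URL and 50 MB limits and flags a sitemap that lists nothing. `check_sitemap` is a hypothetical helper; it does not fetch the listed URLs, so it cannot detect 4xx or redirecting entries on its own.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"
MAX_URLS = 50_000
MAX_BYTES = 50 * 1024 * 1024  # 50 MB = 52,428,800 bytes, measured uncompressed


def check_sitemap(xml_bytes):
    """Return a list of size-related problems found in one sitemap file."""
    problems = []
    if len(xml_bytes) > MAX_BYTES:
        problems.append(f"file is {len(xml_bytes)} bytes, over the 50 MB limit")
    root = ET.fromstring(xml_bytes)
    # Every listed page appears as a namespaced <loc> element.
    locs = [el.text.strip() for el in root.iter(f"{SITEMAP_NS}loc") if el.text]
    if len(locs) > MAX_URLS:
        problems.append(f"{len(locs)} URLs, over the 50,000 URL limit")
    if not locs:
        problems.append("sitemap lists no URLs")
    return problems
```

The `<loc>` values it extracts are also the natural input for a status check that finds 4xx or redirecting URLs.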

Solutions:

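To fix these issues, start by auditing your sitemap. You can use the Sitemaps report in Google Search Console, SEMrush, or PowerMapper. For size-related issues, split an oversized sitemap into several smaller files listed in a sitemap index. Gzip compression reduces transfer size, but the 50 MB limit applies to the uncompressed file, so compressing alone does not fix an oversized sitemap.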
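One way to keep each file under the 50,000-URL limit is to split the URL list across several sitemaps and list them in a sitemap index file, which the sitemaps.org protocol allows. The sketch below does this with Python’s `xml.etree.ElementTree`; the function name `build_sitemaps`, the `sitemap-N.xml` file names, and the base URL are hypothetical choices, and writing the files to disk is left out.

```python
import xml.etree.ElementTree as ET

NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000


def build_sitemaps(urls, base, max_urls=MAX_URLS):
    """Split urls into sitemap files of at most max_urls entries each.

    Returns (files, index_xml): files maps a file name to its XML string,
    and index_xml is a sitemap index that lists every file under base.
    """
    files = {}
    for n, start in enumerate(range(0, len(urls), max_urls), start=1):
        urlset = ET.Element("urlset", xmlns=NS)
        for url in urls[start:start + max_urls]:
            # <url><loc>...</loc></url>; ElementTree escapes characters such as &.
            ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
        files[f"sitemap-{n}.xml"] = ET.tostring(urlset, encoding="unicode")

    index = ET.Element("sitemapindex", xmlns=NS)
    for name in files:
        ET.SubElement(ET.SubElement(index, "sitemap"), "loc").text = base + name
    return files, ET.tostring(index, encoding="unicode")
```

You would then upload each file and submit only the index, for example `https://www.example.com/sitemap-index.xml` (a hypothetical URL), in Google Search Console.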

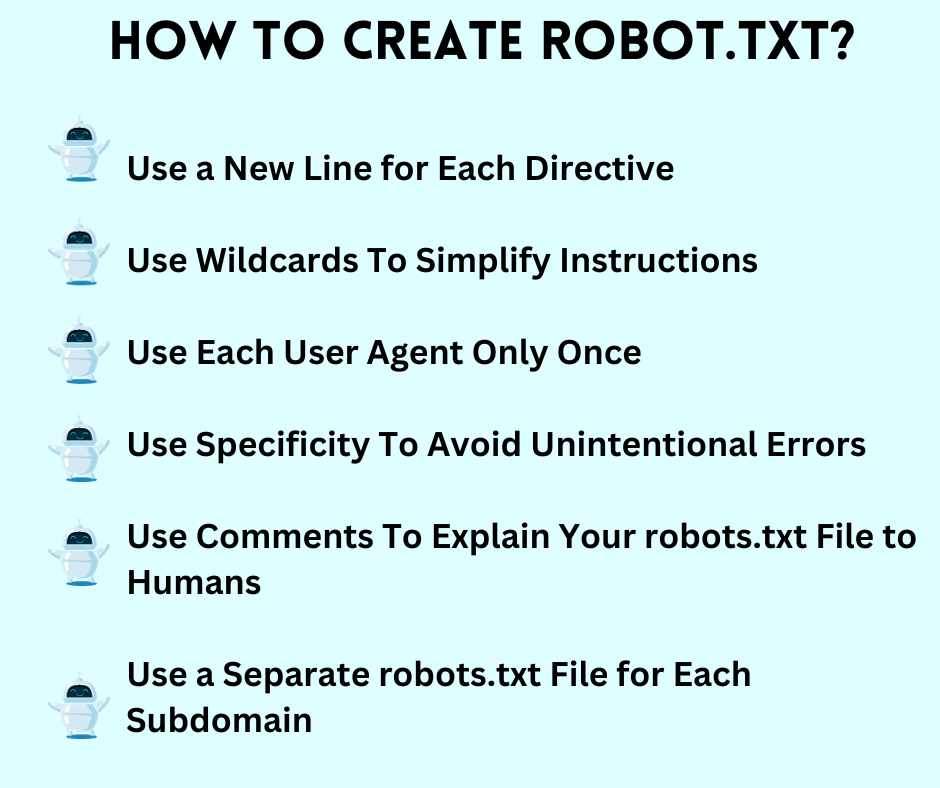

3. Robots.txt file

The robots.txt file is an instruction guide for search engines: it tells crawlers which URLs on your site they may crawl. It is mainly used to keep search engines from overloading your site with crawl requests. The biggest mistake you can make is not having a robots.txt file at all.

Even if a robots.txt file exists, it may not be placed properly. Crawlers look for it only at the root of the host (for example, https://www.example.com/robots.txt), so a file hidden in a subfolder is invisible to them. Another issue is adding noindex rules to robots.txt. Google stopped supporting noindex in robots.txt in 2019, so if you want to keep some pages out of the index, use an alternative method.
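Python’s standard library includes `urllib.robotparser`, which can tell you whether a URL is crawlable under a given robots.txt. This minimal sketch parses a hypothetical robots.txt for `www.example.com` offline and checks three sample URLs. One caveat: Python’s parser applies the first matching rule and does not interpret `*` wildcards inside paths, while Google applies the most specific rule, so treat this as an approximate check for files with overlapping or wildcard rules.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt; in production it must be served from the site
# root, i.e. https://www.example.com/robots.txt
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart
Allow: /

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse() also marks the rules as loaded

for url in ("https://www.example.com/blog/technical-seo",
            "https://www.example.com/admin/settings",
            "https://www.example.com/cart?item=42"):
    verdict = "crawlable" if parser.can_fetch("*", url) else "blocked"
    print(url, "->", verdict)

# Sitemap lines are collected separately from the user-agent rules.
print(parser.site_maps())
```

To check a live site instead, `RobotFileParser("https://www.example.com/robots.txt")` followed by `read()` downloads and parses the real file.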

Solution:

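Create a proper robots.txt file and place it in the root directory of your website. Use wildcards sparingly. Use robots meta tags to prevent unwanted indexing, and remember that crawlers can only see a noindex meta tag on pages that robots.txt allows them to crawl.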
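A robots meta tag such as `<meta name="robots" content="noindex">` in a page’s `<head>` asks search engines not to index that page. As a minimal sketch, assuming you already have a page’s HTML, the check below reports whether it carries a noindex directive; `is_noindex` is a hypothetical helper, and it ignores the `X-Robots-Tag` HTTP header, which can carry the same directive.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Records the directives of every <meta name="robots"> tag on a page."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names, but not values.
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            self.directives.update(
                d.strip().lower() for d in content.split(",") if d.strip()
            )


def is_noindex(html):
    """Return True if the page asks robots not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives
```

Running this over pages you expect to rank is a quick way to catch a stray noindex left over from a staging site.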

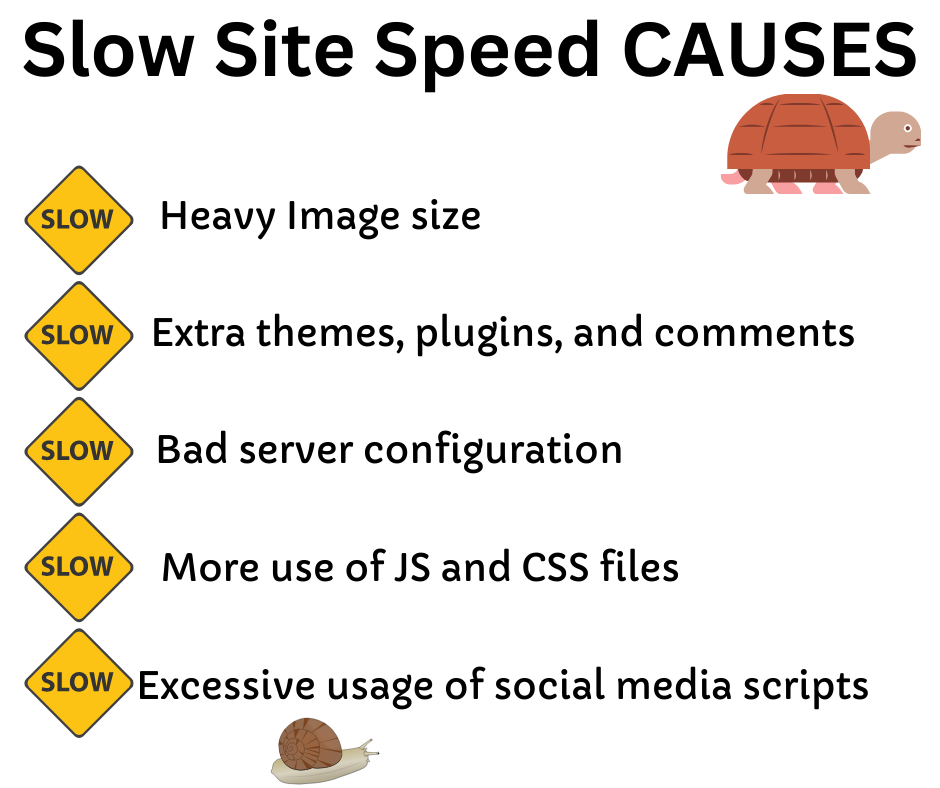

4. Slow Site Speed

Page loading speed is one of the most crucial factors in technical SEO. According to Google, pages should ideally load in no more than 2 to 3 seconds. Some benchmarks from pagetraffic.com:

- A load time of 5 seconds is faster than 25% of websites

- A load time of 2.9 seconds is faster than 50% of websites

- A load time of 1.7 seconds is faster than 75% of websites

- A load time of 0.8 seconds is faster than 94% of websites

If your server takes more than 2 seconds to respond, Google may reduce how often it crawls your site, which can hurt your SERP ranking. Slow responses also hurt user experience: one survey found that many visitors leave a site that takes longer than 3 seconds to load. Slow site speed is therefore a serious issue for anyone trying to rank well.
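To compare a page against the 2-second response threshold mentioned above, you can time how long the server takes to start sending a page. The sketch below is a rough, single-request measurement using Python’s `urllib.request`; `time_response`, `is_slow`, and the 10-second timeout are hypothetical choices, and the fetch function is a parameter so it can be swapped out. It measures server response only, not full page rendering, so it complements rather than replaces a tool like PageSpeed Insights.

```python
import time
import urllib.request

SLOW_RESPONSE_SECONDS = 2.0  # threshold taken from the text above


def _fetch_first_byte(url):
    """Open url and read one byte of the body, approximating time to first byte."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.read(1)


def time_response(url, fetch=_fetch_first_byte):
    """Return the seconds spent fetching url with the given fetch function."""
    start = time.perf_counter()
    fetch(url)
    return time.perf_counter() - start


def is_slow(seconds, threshold=SLOW_RESPONSE_SECONDS):
    """True if a measured response time exceeds the threshold."""
    return seconds > threshold
```

For a live check you might call `time_response("https://www.example.com/")` several times and look at the median, since a single request can be skewed by DNS lookups or network noise.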

Solution:

The most effective starting point for this issue is Google PageSpeed Insights. The tool measures a page’s loading performance and lists opportunities to optimize it. If you are a WordPress user, choose a good hosting provider.

Compress and optimize your media content, and use a content delivery network (CDN) to reduce loading time. Last but not least, use a responsive design so that your site loads quickly on different devices.

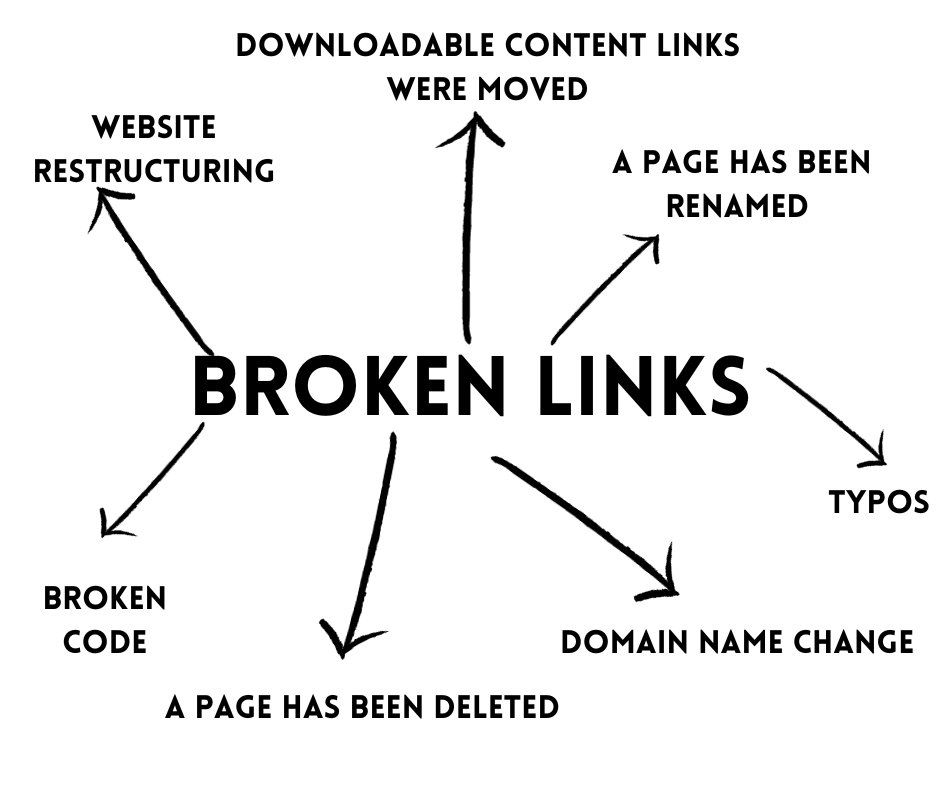

5. Broken links

Broken links are a big threat to page reputation and user experience. They don’t directly affect SERP ranking, but they send negative signals to web crawlers. They also increase the bounce rate and reduce the time visitors spend on the site, so search engines may consider the page unreliable.

Broken links frustrate users and hurt revenue. The visitors coming to your site are potential customers; if they cannot reach the pages they want, your ROI suffers and your regular traffic flow is disrupted.

Solution:

Update your content and check your links for typos. Conduct site audits to find broken links, including broken backlinks. One of the best fixes is a redirect: use a 301 (permanent) redirect to send a broken URL to a working page. This way, you can solve the issue without disturbing other links.
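Most sites configure 301 redirects in their web server or CMS, but the mechanism is the same everywhere: answer the old URL with status `301 Moved Permanently` and a `Location` header pointing at the new one. As an illustration, here is a minimal sketch written as Python WSGI middleware; the `REDIRECTS` mapping and its paths are hypothetical.

```python
# Hypothetical mapping from removed URLs to their replacements.
REDIRECTS = {
    "/old-blog/technical-seo": "/blog/technical-seo",
    "/products/discontinued-widget": "/products/",
}


def redirect_middleware(app, redirects=REDIRECTS):
    """Wrap a WSGI app so that known broken paths get a 301 to their new home."""

    def wrapped(environ, start_response):
        target = redirects.get(environ.get("PATH_INFO", ""))
        if target is not None:
            # Permanent redirect: browsers and crawlers should use the new URL.
            start_response("301 Moved Permanently", [("Location", target)])
            return [b""]
        # Every other path is handled by the wrapped application as usual.
        return app(environ, start_response)

    return wrapped
```

Keeping the redirects in a single mapping makes it easy to point each old URL directly at its final destination and avoid redirect chains. For local testing, the standard library’s `wsgiref.simple_server.make_server` can serve the wrapped app.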

Delete bad links or replace them with valid ones. You do not need to fix every broken link on the website: prioritize pages with high authority and fix the broken links on the pages that add the most value.
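The prioritization above can be sketched as a small checker: collect each page’s outgoing links, check their HTTP status, and sort the broken ones by the authority of the page they sit on. This minimal sketch uses `urllib.request` with HEAD requests; the `authority` scores are hypothetical and would come from whatever SEO tool you use, and some servers reject HEAD requests, so a GET fallback may be needed in practice.

```python
import urllib.error
import urllib.request


def link_status(url):
    """Return the HTTP status code for url via a HEAD request, or None on network errors."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # 4xx and 5xx responses arrive as HTTPError
    except urllib.error.URLError:
        return None  # DNS failure, refused connection, and so on


def broken_links_by_priority(links, authority, status_of=link_status):
    """List (page, link, status) for broken links, highest-authority pages first.

    links maps each page URL to the URLs it links to; authority maps each
    page URL to a numeric score (hypothetical, e.g. exported from an SEO tool).
    """
    broken = []
    for page, targets in links.items():
        for target in targets:
            status = status_of(target)
            if status is None or status >= 400:
                broken.append((page, target, status))
    broken.sort(key=lambda item: authority.get(item[0], 0), reverse=True)
    return broken
```

Working down the returned list from the top means the links on your most valuable pages get fixed first.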

6. Mobile Experience

Most searches now happen on mobile devices, so your site needs a responsive design that adapts itself to each device. If your website is not optimized for mobile phones, you will lose a lot of traffic.

Your bounce rate will increase, your conversion rate will decrease, and users will have a bad experience. Optimizing your site for mobile devices is a necessity, because Google uses a mobile-first approach to indexing and ranking.

Common mobile usability issues include unresponsive buttons and links, poor navigation and site structure, and excessive pop-ups and ads. Some mobile sites also use icons so small that they are difficult to tap.

Content on mobile sites is often formatted in a way that forces horizontal scrolling, images and videos may load slowly, and many similar issues can occur.

Solution:

Run a mobile-friendliness test with an SEO tool of your choice. Format your content so that it does not require horizontal scrolling, and make sure your icons are large enough for smooth navigation.
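Responsive pages usually declare a viewport meta tag such as `<meta name="viewport" content="width=device-width, initial-scale=1">`. Without one, mobile browsers typically lay the page out at desktop width, which is a common cause of tiny text and horizontal scrolling. The following minimal sketch checks a page’s HTML for that tag using Python’s `html.parser`; `viewport_issues` is a hypothetical helper and covers only this one check, not a full mobile audit.

```python
from html.parser import HTMLParser


class ViewportParser(HTMLParser):
    """Captures the content of the first <meta name="viewport"> tag, if any."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if (tag == "meta" and self.viewport is None
                and (attrs.get("name") or "").lower() == "viewport"):
            self.viewport = attrs.get("content") or ""


def viewport_issues(html):
    """Return a list of viewport problems found in the page's HTML."""
    parser = ViewportParser()
    parser.feed(html)
    if parser.viewport is None:
        return ["no viewport meta tag: mobile browsers will likely render at desktop width"]
    # Parse "width=device-width, initial-scale=1" into {"width": "device-width", ...}.
    settings = {}
    for part in parser.viewport.split(","):
        key, _, value = part.partition("=")
        settings[key.strip().lower()] = value.strip().lower()
    if settings.get("width") != "device-width":
        return ["viewport width is not set to device-width"]
    return []
```

A page that passes this check can still scroll horizontally if some element is wider than the screen, so it is worth pairing with a visual test on a real device.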