Duplicate content refers to the situation where similar or identical content appears on different web pages, or even on the same website. It’s important to understand that having duplicate content on your website can have a significant negative impact on your search engine ranking.

When Google or other search engines crawl your website and detect duplicate content, they may filter out the duplicate versions or rank the affected pages lower on the search engine results page (SERP). This can significantly reduce traffic to your website, which can ultimately harm your business.

Duplicate content can also affect your website’s credibility and reputation. If your website contains duplicate content, your audience may not trust your content, and they may look for other sources of information. This can result in a loss of credibility and negatively impact your brand image.

Importance Of Avoiding Duplicate Content

As a website owner or content creator, it’s crucial to understand the negative impacts of having duplicate content on your website. One of the most significant consequences is that duplicate pages may not get indexed at all, because search engines such as Google usually pick one version to show and filter out the rest.

By addressing duplicate content issues on your website, you can improve your search engine ranking, build trust with your audience, and enhance your overall online reputation.

Search engines prefer unique and high-quality content as answers to users’ queries. Therefore, if you have duplicate content on your website, it will be challenging for search engines to identify which page to rank higher, resulting in lower SERP rankings and less traffic.

Another significant impact of having duplicate content is diluted backlink strength. Backlinks are one of the essential factors that search engines use to evaluate the relevance and authority of a website.

However, if you have similar content on multiple pages, the backlinks will be divided between them, weakening the strength of each page. This can also lead to a decrease in the credibility of your website.

These factors can lead to less traffic on all similar content, lower search engine rankings, and ultimately, less return on investment (ROI) for your website. Therefore, it’s crucial to avoid duplicate content and ensure that your website provides unique and high-quality content to your audience.

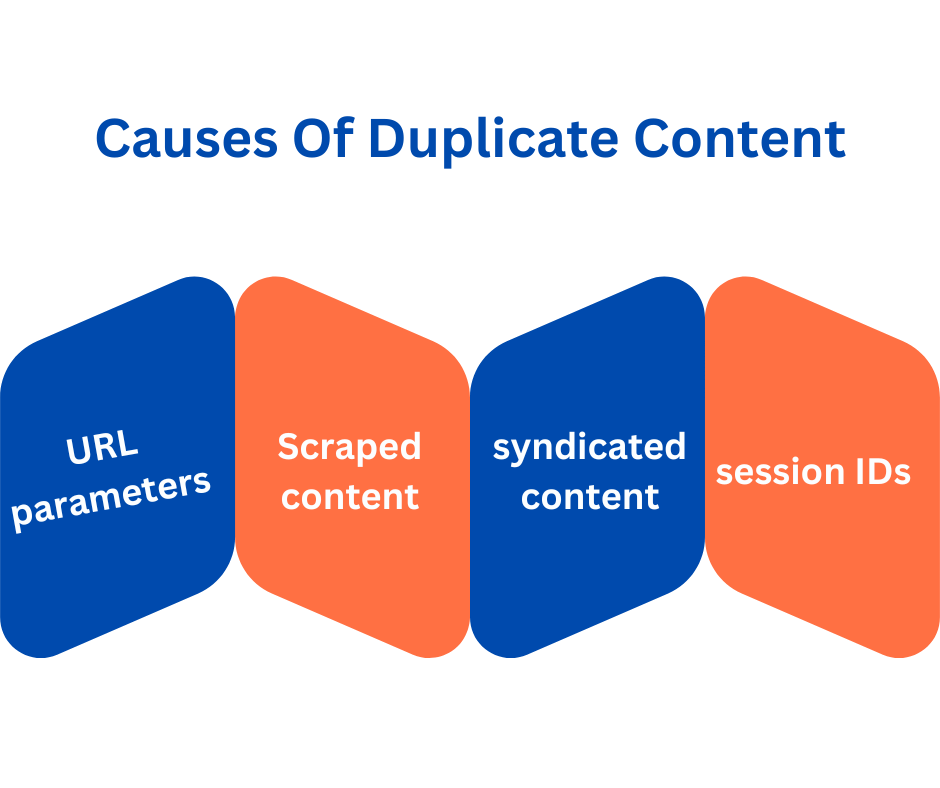

Causes Of Duplicate Content

There are two types of duplicate content. External duplication occurs when your content is similar to a page on another website. Internal duplication occurs when two or more pages on your own website contain similar content.

One cause of internal duplication is session IDs. A session ID is a unique code assigned to each user session on a website. If the ID is added to the URL and 100 different users visit the same page, 100 different URLs are generated, each with its own session ID.

All of these URLs show the same content, which creates internal duplication. URL parameters are another way of tracking a user’s session and activity. They are the key-value pairs that follow the question mark in a URL, and each combination forms a unique URL.

If such URLs point to the same page but carry different tracking parameters, search engines may treat each URL as a separate page.
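To make the URL parameter problem concrete, here is a minimal Python sketch that strips tracking parameters so that different URLs for the same page collapse into one. The parameter names in TRACKING_PARAMS and the example.com URLs are assumptions chosen for illustration; a real site would need its own list.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Hypothetical parameter names that only track visitors and do not
# change what the page shows. Adjust this set for your own site.
TRACKING_PARAMS = {"sessionid", "sid", "utm_source", "utm_medium", "utm_campaign"}


def normalize_url(url: str) -> str:
    """Drop tracking parameters and sort the remaining ones."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in TRACKING_PARAMS
    ]
    kept.sort()
    # The fragment is dropped because it never reaches the server.
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


urls = [
    "https://example.com/shoes?sessionid=abc123",
    "https://example.com/shoes?sessionid=xyz789&utm_source=newsletter",
    "https://example.com/shoes",
]
unique_pages = {normalize_url(u) for u in urls}
print(unique_pages)  # the three URLs collapse to a single entry
```

Running a crawl export through a function like this is one rough way to see how many distinct pages sit behind a long list of parameterized URLs.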

External duplication may be caused by scraped content, which is content copied from your website without permission. Scraped content is often republished elsewhere, and Google is not always able to tell which version is the original. As a site owner, you should monitor for copies of your content and take steps to prevent it from being stolen.

If your content is republished on another domain with your permission, it is called syndicated content. This can help you increase your reach, but search engines will notice and may filter out such pages, counting them as duplicate content.

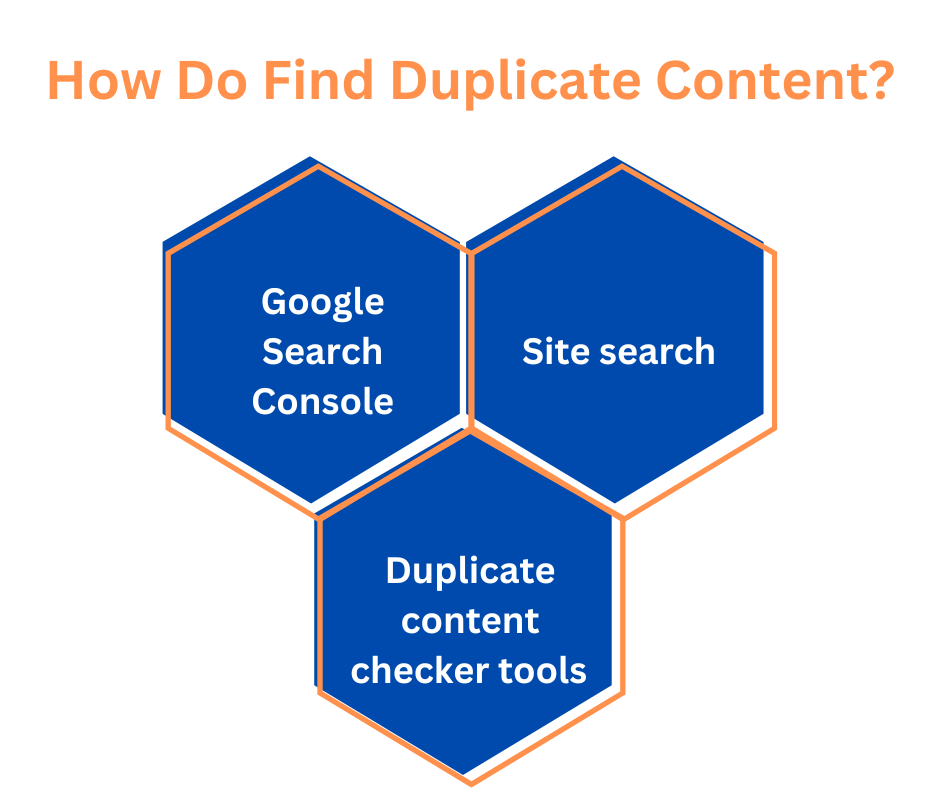

How To Find Duplicate Content?

Finding duplicates is an important but time-consuming task. However, some tools can speed up the process. Let us see how they can help:

Google Search Console

Google Search Console is an incredibly useful and free tool that webmasters can use to identify and address various issues that may lead to duplicate content on their websites.

These issues may include different versions of the same URL being accessible, such as HTTP and HTTPS, or WWW and non-WWW versions.

By reviewing the reports in Search Console, you can identify and address these duplicate content issues before they hurt your website’s search engine rankings.

Site search

If you suspect that there is duplicate content on a website, there is a simple and effective method to confirm your suspicion.

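All you need to do is type “site:” followed by the website’s domain (for example, site:example.com) into the search bar of a search engine, optionally adding a sentence from the page in quotation marks, and hit enter. The search results list the indexed pages of that website, so you can spot the same content showing up more than once.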

You may find the same content appearing on two different URLs. This could indicate that the website has duplicate content, which can negatively impact its search engine rankings.

By identifying and removing duplicate content, website owners can improve their website’s search engine optimization (SEO) and provide a better user experience for their visitors.
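If you already have the text of your pages (for example, from a crawl export), the same check can be roughly automated. The sketch below is only an illustration: it groups URLs whose text is identical after lowercasing and collapsing whitespace. The function names and the sample pages are hypothetical, and this catches exact copies only, not reworded ones.

```python
import hashlib
from collections import defaultdict


def fingerprint(text: str) -> str:
    """Hash the text after lowercasing and collapsing whitespace."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_exact_duplicates(pages: dict[str, str]) -> list[list[str]]:
    """Return groups of URLs that share the same text fingerprint."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical sample data: URL -> extracted page text.
pages = {
    "https://example.com/shoes": "Red running shoes for every season.",
    "https://example.com/shoes?ref=home": "Red  running shoes for every season.",
    "https://example.com/boots": "Waterproof boots for winter hikes.",
}
print(find_exact_duplicates(pages))
```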

Duplicate content checker tools

There are numerous tools available online that can help you check for duplicate content. However, it can be a daunting task to choose the right one. To make things easier for you, we have compiled a list of the top 5 duplicate content checker tools.

1. Copyscape

One of the most popular plagiarism checker tools is Copyscape. It allows you to check the percentage of copied or duplicate content on your webpage or website.

The tool can also report which content has been copied from your page and where it appears. Additionally, it offers a WordPress plugin that can help you check your website’s duplicate content.

2. Duplichecker

Another great duplicate content checker tool is Duplichecker. It’s a favorite among many and provides 50 plagiarism text checks per day for free. Furthermore, you can also download the file to keep a record of the duplicate content.

3. Siteliner

Siteliner is another tool that helps you avoid duplicate content. It’s a simple and user-friendly tool: you enter your website’s URL and it scans your pages for content that is duplicated within your own site.

4. Grammarly

Grammarly is a popular grammar checker tool that also has a built-in plagiarism checker. It can help you identify and fix grammatical errors while also checking your content for plagiarism.

5. Plag-Spotter

Plag-Spotter is a reliable tool that checks for duplicate content and provides a detailed report of the copied content. It’s a paid tool, but it offers a free trial to test its features before making a purchase.
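To give a feel for what a “percentage of duplicate content” can mean, here is a small Python sketch that compares two texts using overlapping three-word sequences (shingles) and the Jaccard similarity. This is a common textbook approach and only an assumption for illustration; it is not a description of how any of the tools above work internally.

```python
def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Split text into overlapping word sequences of the given size."""
    words = text.lower().split()
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(a: str, b: str, size: int = 3) -> float:
    """Share of shingles the two texts have in common (0.0 to 1.0)."""
    set_a, set_b = shingles(a, size), shingles(b, size)
    if not set_a and not set_b:
        return 1.0  # two empty texts are treated as identical
    return len(set_a & set_b) / len(set_a | set_b)


original = "the quick brown fox jumps"
rewrite = "the quick brown fox sleeps"
print(f"{jaccard_similarity(original, rewrite):.0%}")  # 50%
```

A score near 100% suggests the two pages are close copies, while a low score suggests the content is mostly unique. Where to draw the line is a judgment call.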

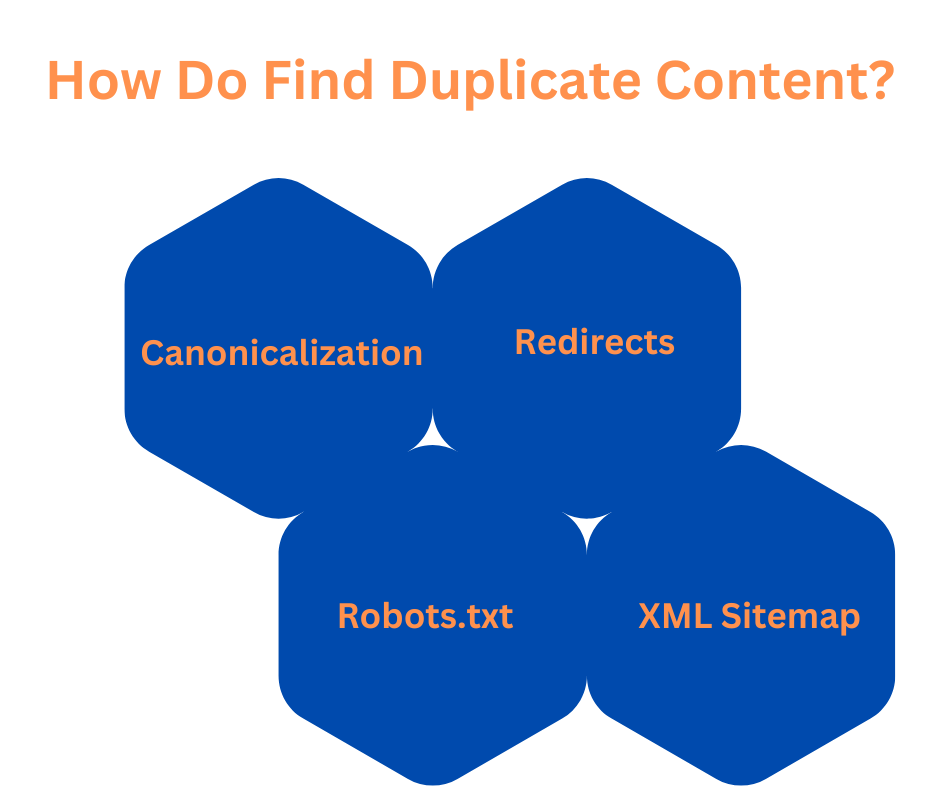

How to Avoid And Fix Duplicate Content?

To avoid duplicate content, it’s important to ensure that each page on your website offers unique and valuable content. This means avoiding copying content from other websites or even from your own website.

If you must reuse content, make sure to attribute the source and add value to the content by adding your insights and perspectives.

If you discover that your website contains duplicate content, it’s essential to take steps to fix it as soon as possible. This can involve removing duplicate content or using canonical tags to inform search engines which version of the content is the source.

Avoiding duplicate content is better than fixing it. There are some easy preventive measures you can take. The best way is to guide the search engine to the original content you created. Let’s see some ways to guide search engines and keep duplicate content from getting indexed.

Canonicalization

A canonical URL is the preferred version of a webpage among a set of duplicates. It is especially useful when the same content appears on different URLs, which can confuse search engines and lower your ranking.

In such cases, a canonical URL is used to inform search engines of the most authoritative or original version of the content, allowing them to index it accurately and avoid penalizing you for duplicate content.

When your content also appears on other domains, for example through syndication, you can use cross-domain canonicalization. This involves pointing all duplicate pages to the preferred or canonical version of the content, regardless of the domain or URL structure. By doing so, you can consolidate link equity and avoid diluting your page authority.

Canonicalization is one of the most effective ways to avoid duplicate content and boost your SEO. It involves adding a one-line link element with rel="canonical" to the head section of each page’s HTML, which is invisible to visitors but read by search bots.

This element communicates the preferred version of your content and helps search engines understand how to index it correctly.
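As an illustration, the Python sketch below shows what such a canonical link element looks like inside a page and how a script might read it back, which can be handy for spot-checking your own pages. The sample HTML, the example.com URL, and the helper names are assumptions made for this example.

```python
from html.parser import HTMLParser
from typing import Optional


class CanonicalFinder(HTMLParser):
    """Remember the href of the first <link rel="canonical"> element."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values and self.canonical is None:
            self.canonical = attributes.get("href")


def find_canonical(html: str) -> Optional[str]:
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical


# Hypothetical page: the canonical element sits in the head section.
SAMPLE_PAGE = """
<html>
  <head>
    <title>Red Shoes</title>
    <link rel="canonical" href="https://example.com/shoes/red">
  </head>
  <body>Page content</body>
</html>
"""
print(find_canonical(SAMPLE_PAGE))  # https://example.com/shoes/red
```

Every duplicate variant of the page would carry the same element, so all of them point search engines to one preferred URL.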

Redirects

In website development and search engine optimization, 301 redirects are widely recommended as a way to prevent duplicate content.

A 301 redirect tells both users and search engine bots that a page has permanently moved to a new location.

When the same content appears on multiple URLs, search engines can be unsure which URL to rank. A 301 redirect sends users and search engine bots from each duplicate URL to the correct one, which helps maintain the website’s overall SEO performance and user experience.

This method is one of the most effective and widely used techniques for resolving duplicate content on a website.
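The decision behind such a redirect can be sketched in a few lines of Python. The example assumes a hypothetical site whose preferred address is https://example.com and whose HTTP and WWW variants should all redirect there. In practice this logic usually lives in the web server or CMS configuration rather than in a standalone script.

```python
from typing import Optional, Tuple
from urllib.parse import urlsplit, urlunsplit

# Assumptions for illustration: the preferred scheme and host of the site,
# plus every host name that serves the same content.
CANONICAL_SCHEME = "https"
CANONICAL_HOST = "example.com"
SITE_HOSTS = {"example.com", "www.example.com"}


def redirect_for(url: str) -> Optional[Tuple[int, str]]:
    """Return (301, target) if the URL is a duplicate variant, else None."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host not in SITE_HOSTS:
        return None  # not one of our hosts, nothing to do
    if parts.scheme == CANONICAL_SCHEME and host == CANONICAL_HOST:
        return None  # already the preferred version
    target = urlunsplit(
        (CANONICAL_SCHEME, CANONICAL_HOST, parts.path or "/", parts.query, "")
    )
    return 301, target


print(redirect_for("http://www.example.com/shoes?color=red"))
print(redirect_for("https://example.com/shoes"))
```

The first call returns a 301 with the preferred URL as its target, while the second returns None because that URL is already the preferred version.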

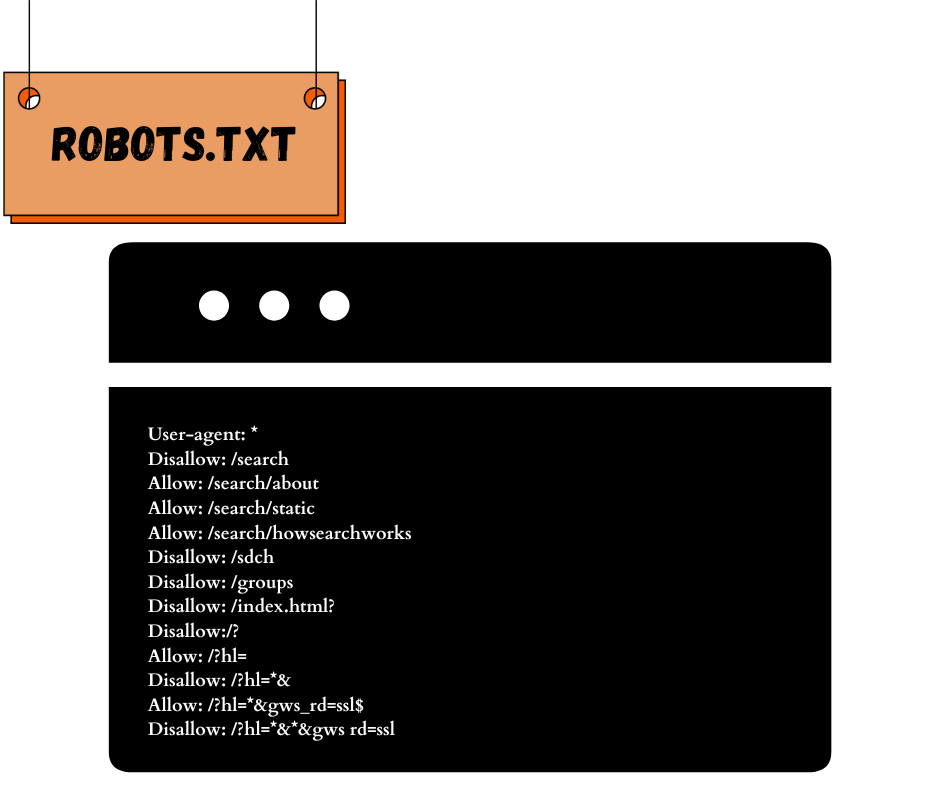

Robots.txt

The “robots.txt” file serves as a guide for search engine crawlers, providing instructions on which pages or directories of a website they may crawl. One common use of the “robots.txt” file is to keep crawlers away from duplicate content, which can negatively impact a website’s search engine rankings.

The file sits at the root of the website and lists “Disallow” rules for the paths crawlers should skip. Note that it controls crawling rather than indexing: to keep a specific page out of the index, webmasters typically add a robots meta tag with a “noindex” value to the head section of that page instead, and crawlers must be allowed to fetch the page in order to see that tag.

Used with care, these controls help ensure that only the original version of the content is crawled and indexed, which supports the website’s search engine visibility and rankings.
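Here is a minimal sketch of what a robots.txt file can look like and how its rules apply to individual URLs, checked with the robots.txt parser from Python’s standard library. The disallowed paths (/print/ and /search) and the crawler name are hypothetical examples of sections that often repeat content found elsewhere on a site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt, normally served at https://example.com/robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /print/
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Printer-friendly copies and internal search results are blocked,
# while the original page stays crawlable.
print(parser.can_fetch("ExampleBot", "https://example.com/print/red-shoes"))  # False
print(parser.can_fetch("ExampleBot", "https://example.com/search?q=shoes"))   # False
print(parser.can_fetch("ExampleBot", "https://example.com/shoes/red"))        # True
```

Keep in mind that these rules only ask crawlers not to fetch the listed paths. A blocked URL can still end up in search results if other pages link to it.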

XML Sitemap

If you have a website with a lot of pages, some of your content might unintentionally be duplicated across multiple pages. This can negatively impact your website’s search engine rankings and overall user experience. Using an XML sitemap is one way to help avoid and fix this.

A sitemap provides search engines with a list of the pages on your website that you want indexed. By listing only the preferred version of each page, you signal which URLs search engines should treat as the originals. Including an XML sitemap on your website can therefore improve your search engine optimization and ensure a better user experience for your visitors.
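As a rough sketch, the Python snippet below builds a small XML sitemap that lists each preferred URL exactly once. The build_sitemap helper and the example.com URLs are assumptions for illustration; most content management systems and SEO plugins can generate this file for you.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(canonical_urls: list[str]) -> str:
    """Return sitemap XML with one <url> entry per distinct URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in sorted(set(canonical_urls)):  # drop repeated URLs
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


sitemap_xml = build_sitemap([
    "https://example.com/shoes",
    "https://example.com/boots",
    "https://example.com/shoes",  # repeated on purpose, listed only once
])
print(sitemap_xml)
```

The resulting file is usually saved as sitemap.xml at the root of the site and submitted through Google Search Console.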